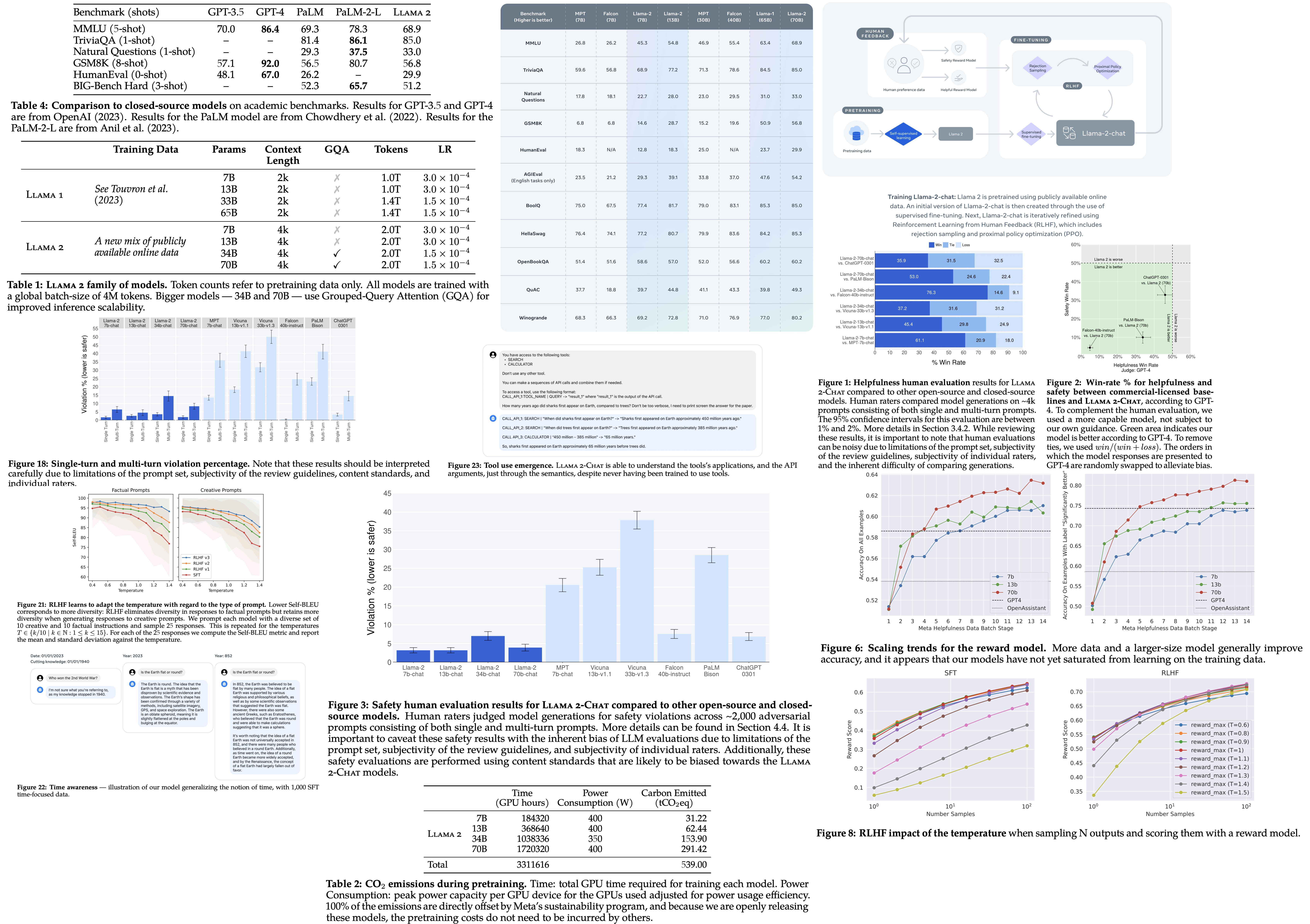

According to Meta, Llama 2 is a collection of pretrained and fine-tuned generative text models (large language models, or LLMs) ranging in scale from 7 billion to 70 billion parameters. The pretrained models were trained on publicly available online data sources. The fine-tuned model, Llama-2-chat (also called Llama Chat), additionally leverages publicly available instruction datasets and over 1 million human annotations. Each model has its own repository; one of them, for example, hosts the 70B fine-tuned model. The full range of Llama 2 models can be found and downloaded below.
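Because the three sizes differ by an order of magnitude, it helps to estimate how much memory the weights alone occupy before choosing a download. The sketch below is a back-of-the-envelope calculation, assuming the nominal parameter counts (7B, 13B, 70B) and ignoring activations, the KV cache, optimizer state, and framework overhead; the 4-bit column is only meant to illustrate why quantized variants are popular.

```python
# Rough weight-only memory estimate for the nominal Llama 2 sizes.
# Assumption: nominal counts; real checkpoints differ slightly.
LLAMA2_SIZES = {"7B": 7e9, "13B": 13e9, "70B": 70e9}

def weight_memory_gb(num_params: float, bits_per_param: int) -> float:
    """Bytes needed to store the weights, expressed in GB (1 GB = 1e9 bytes)."""
    return num_params * bits_per_param / 8 / 1e9

for name, n_params in LLAMA2_SIZES.items():
    fp16 = weight_memory_gb(n_params, 16)  # 2 bytes per parameter
    int4 = weight_memory_gb(n_params, 4)   # 0.5 bytes per parameter
    print(f"{name}: ~{fp16:.1f} GB at 16-bit, ~{int4:.1f} GB at 4-bit")
```

Under these assumptions the 70B model needs about 140 GB at 16-bit precision, which is why multi-GPU setups or quantization come up so often for the largest size.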

Meta released Llama 2 in the summer of 2023. Compared with the original Llama, Llama 2 was pretrained on 40% more tokens and doubles the context length, from 2,048 to 4,096 tokens. All three model sizes (7B, 13B, and 70B) are available for download on Hugging Face, and Llama 2 is also available on Azure (see "Llama 2 on Azure", 16 August 2023). To run a quantized model locally, download the specific Llama 2 weights you want to use, for example Llama-2-7B-Chat-GGML, and place them inside the models folder; download only the files with GGML in the name. A Models (or LLMs) API can be used to connect to popular LLM providers such as Hugging Face or Replicate. Demo links are also available for Code Llama 13B, 13B-Instruct (chat), and 34B. To fine-tune the models with the TRL library, install it with `pip install trl`.
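The "download only files with GGML in the name" rule can be expressed as a small filter over a repository's file listing. The sketch below is illustrative only: the file names are hypothetical, the `models` folder name comes from the instructions above, and in practice the listing could come from a tool such as `huggingface_hub.list_repo_files`, which this sketch does not call (it performs no network access).

```python
from pathlib import Path

def select_ggml_files(filenames: list[str]) -> list[str]:
    """Keep only the files whose name contains "ggml" (case-insensitive)."""
    return [name for name in filenames if "ggml" in name.lower()]

def destination_in_models_dir(filename: str, models_dir: str = "models") -> Path:
    """Return the path inside the models folder where a weight file should go."""
    return Path(models_dir) / Path(filename).name

# Hypothetical repository listing, for illustration only.
listing = [
    "README.md",
    "config.json",
    "llama-2-7b-chat.ggmlv3.q4_0.bin",
    "LICENSE.txt",
]

for name in select_ggml_files(listing):
    # Where the downloaded weight file would be placed.
    print(destination_in_models_dir(name))
```

Keeping the filter separate from the download step makes it easy to check which files would be fetched before spending bandwidth on multi-gigabyte weights.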

Llama 2 is a collection of second-generation open-source LLMs from Meta, and its models can be adapted to specific tasks through fine-tuning. One tutorial shows how to fine-tune the LLaMA 2 model on Paperspace's NVIDIA Ampere GPUs. Llama 2 models can also be fine-tuned on your own data through hosted fine-tuning services to enhance prediction accuracy. For a single-node run on four GPUs with FSDP and LoRA-based PEFT, a fine-tuning script can be launched with `torchrun --nnodes 1 --nproc_per_node 4 llama_finetuning.py --enable_fsdp --use_peft --peft_method lora --model_name`, followed by the model to fine-tune; `--nnodes 1` selects one machine and `--nproc_per_node 4` starts four processes on it. For a practitioner-oriented walkthrough, see "How to Fine-tune Llama 2 with LoRA for Question Answering: A Guide for Practitioners" by the Deci Research Team (September 5, 2023).
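LoRA keeps a pretrained weight matrix W frozen and learns a low-rank update, so a layer computes h = W x + (alpha / r) * B (A x), where A is r x k, B is d x r, and r is much smaller than d and k. The sketch below shows that forward pass and the resulting parameter count in plain Python. It is a minimal illustration, not the PEFT library's implementation; the small matrices and the alpha and rank values are made up, and real LoRA typically initializes B to zero so the update starts at zero.

```python
def matvec(M: list[list[float]], x: list[float]) -> list[float]:
    """Multiply matrix M (rows x cols) by vector x (length cols)."""
    return [sum(m * xi for m, xi in zip(row, x)) for row in M]

def lora_forward(W, A, B, x, alpha: float, r: int) -> list[float]:
    """h = W x + (alpha / r) * B (A x); W is frozen, A and B are trainable."""
    base = matvec(W, x)                # frozen pretrained path
    delta = matvec(B, matvec(A, x))    # low-rank path: project down, then up
    scale = alpha / r
    return [b + scale * d for b, d in zip(base, delta)]

def lora_param_count(d: int, k: int, r: int) -> int:
    """Trainable parameters for A (r x k) plus B (d x r)."""
    return r * (d + k)

# Toy example with hypothetical values (d = k = 2, rank r = 1).
W = [[1.0, 0.0], [0.0, 1.0]]
A = [[1.0, 1.0]]         # r x k
B = [[0.5], [0.0]]       # d x r (nonzero, as if after some training)
print(lora_forward(W, A, B, [1.0, 2.0], alpha=2.0, r=1))

# A 4096 x 4096 projection (Llama 2 7B's hidden size) with rank 8.
full = 4096 * 4096
lora = lora_param_count(4096, 4096, 8)
print(f"LoRA trains {lora:,} of {full:,} parameters ({lora / full:.2%})")
```

For the 4096 x 4096 case, rank 8 trains 65,536 parameters instead of 16,777,216, which is the main reason LoRA and QLoRA fit on modest GPUs.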

Several guides cover specific fine-tuning methods. "Fine-tune Llama 2 with DPO" explains how to use the TRL library's DPO method to fine-tune Llama 2 on a specific dataset, and "Instruction-tune Llama 2" is a guide to instruction-tuning the model. A related blog post introduces the Direct Preference Optimization (DPO) method, which is now available in the TRL library, and shows how to fine-tune the Llama v2 7B-parameter model. Another tutorial gives a comprehensive guide to fine-tuning LLaMA 2 with techniques such as QLoRA, PEFT, and SFT to overcome memory and compute limitations. A further post looks at how to fine-tune Llama 2 70B using PyTorch FSDP and related best practices, leveraging Hugging Face Transformers. Finally, one tutorial uses QLoRA, a fine-tuning method that combines quantization and LoRA; for more information about what those are and how they work, see this post.
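DPO replaces a separate reward model with a loss computed directly from log-probabilities of a preferred ("chosen") and a dispreferred ("rejected") response, measured under the policy being trained and under a frozen reference model: loss = -log sigmoid(beta * [(log pi(y_w|x) - log pi_ref(y_w|x)) - (log pi(y_l|x) - log pi_ref(y_l|x))]). The sketch below computes that standard objective for a single pair in plain Python, for intuition only; TRL computes it internally, and the beta value and log-probabilities here are illustrative assumptions.

```python
import math

def log_sigmoid(z: float) -> float:
    """Numerically stable log(sigmoid(z))."""
    if z >= 0:
        return -math.log1p(math.exp(-z))
    return z - math.log1p(math.exp(z))

def dpo_loss(policy_chosen_logp: float, policy_rejected_logp: float,
             ref_chosen_logp: float, ref_rejected_logp: float,
             beta: float = 0.1) -> float:
    """DPO loss for one preference pair (sequence-level log-probabilities)."""
    # How much more (or less) likely each response became relative to the reference.
    chosen_ratio = policy_chosen_logp - ref_chosen_logp
    rejected_ratio = policy_rejected_logp - ref_rejected_logp
    # Reward the policy for widening the gap in favor of the chosen response.
    return -log_sigmoid(beta * (chosen_ratio - rejected_ratio))

# Policy identical to the reference: loss equals log 2.
print(dpo_loss(-12.0, -12.0, -12.0, -12.0))
# Policy shifted toward the chosen response: loss drops below log 2.
print(dpo_loss(-10.0, -14.0, -12.0, -12.0))
```

The beta parameter controls how strongly the policy is allowed to drift from the reference model: larger values sharpen the preference signal, smaller values keep the fine-tuned model closer to where it started.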
